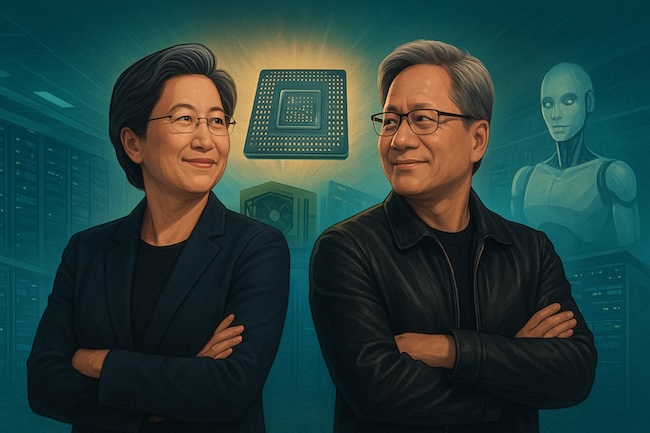

How Lisa Su Rebuilt AMD into Nvidia's Main AI Rival

By Goldsea Staff | 10 Oct, 2025

In the 11 years since Lisa Su became CEO of a nearly bankrupt AMD, the company has passed former industry leader Intel and become Nvidia's chief rival in building an AI ecosystem.

When Lisa Su took the helm at AMD in 2014, the chipmaker was on the verge of bankruptcy while Intel was the world's dominant chipmaker. Today AMD is poised to become the only credible rival to Nvidia in providing the processing ecosystem for the AI boom, while Intel's struggles continue.

AMD's product line includes a Ryzen-powered PC with localized AI capabilities. (AMD Photo)

While her distant cousin Jensen Huang was evolving Nvidia's graphics processors (GPUs) into the workhorse of the AI revolution, Su was making a string of uncannily prescient decisions. In the first years of her disciplined leadership, AMD trimmed products that were underperforming Intel's and focused on developing a new high-performance chip architecture, Zen. The resulting processors came to surpass Intel's in high-performance computing.

Su's deep knowledge of processor architecture led her to discontinue development of monolithic all-purpose chips in favor of modular chiplets that can be combined into versatile chipsets with high-bandwidth memory. Another of her key decisions was to outsource chip fabrication to TSMC, now the global leader in powerful and energy-efficient 3-nanometer chips currently rolled out for both AMD and Nvidia.

For the AI future, Su invested in the ROCm open compute stack to provide an alternative to Nvidia's extensive CUDA library, which had been keeping AI developers and data centers tied to Nvidia GPUs. She paired that investment with partnerships with major AI players like Microsoft, Oracle, Amazon and Meta to develop customized chipsets that are often more cost-efficient than Nvidia GPUs.

For a detailed exposition of how Lisa Su saved AMD and evolved it into a player for the AI era, we enlisted ChatGPT with the prompt:

What are the 5 most important strategic decisions that Lisa Su made to push AMD past Intel and become the main AI processor rival to Nvidia?

Then we followed up with a request for a timeline and details of how Su positioned AMD's product development to take advantage of Intel's missteps and provide a credible alternative to Nvidia's dominant product line, for a complete picture of the steps in AMD's rise.

ChatGPT

Here are five of the most consequential strategic decisions Lisa Su made that enabled AMD to close the gap with Intel and become a serious AI‐processing rival to Nvidia. (Let me know if you want deeper technical or tactical detail on any of them.)

Key Strategic Decisions

Betting on Zen architecture & refocusing on performance leadership

When Lisa Su took over in 2014, AMD was lagging badly in server CPUs and general compute. She made the bold decision to focus the company’s engineering and R&D efforts on a new microarchitecture (Zen) that would compete head‐to‐head with Intel’s CPUs. (Forbes)

This included being willing to delay sales of weaker chips rather than push out underperforming products. That discipline paid off: the Ryzen line for desktops, and especially EPYC for servers, became competitive (even superior in many metrics) against Intel’s Xeon line. (Business Insider)

Adopting the chiplet / modular design model and using foundry partnerships

Rather than trying to do everything in monolithic chips (like Intel historically has), under Su AMD leaned into chiplets – smaller modular blocks of silicon that could be manufactured separately and then combined. This has helped with yield, flexibility, cost control and allowed AMD to mix process nodes (put more advanced nodes where they matter most, less advanced where not). (asianintelligence.ai)
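The yield argument for chiplets can be made concrete with a back-of-the-envelope calculation. The sketch below uses the textbook Poisson yield model, in which the chance that a die is defect-free falls off exponentially with its area. The die sizes and defect density are hypothetical round numbers chosen for illustration, not AMD or TSMC figures, and the model ignores packaging cost and the extra silicon that chiplet interconnects need.

```python
import math


def poisson_yield(area_mm2: float, defects_per_cm2: float) -> float:
    """Expected fraction of defect-free dies under a simple Poisson model."""
    area_cm2 = area_mm2 / 100.0
    return math.exp(-area_cm2 * defects_per_cm2)


def silicon_per_good_product(die_area_mm2: float, dies_per_product: int,
                             defects_per_cm2: float) -> float:
    """Average wafer area (mm^2) consumed to obtain one working product.

    Assumes bad dies are discarded before assembly, so each die in the
    product costs its own area divided by its own yield.
    """
    die_yield = poisson_yield(die_area_mm2, defects_per_cm2)
    return dies_per_product * die_area_mm2 / die_yield


# Hypothetical inputs: 0.1 defects per cm^2, 600 mm^2 of logic in total.
DEFECT_DENSITY = 0.1
monolithic = silicon_per_good_product(600, 1, DEFECT_DENSITY)
chiplets = silicon_per_good_product(150, 4, DEFECT_DENSITY)

print(f"monolithic die yield: {poisson_yield(600, DEFECT_DENSITY):.1%}")
print(f"chiplet die yield:    {poisson_yield(150, DEFECT_DENSITY):.1%}")
print(f"silicon per good product: {monolithic:.0f} mm^2 vs {chiplets:.0f} mm^2")
```

Under these assumed inputs, four small dies waste noticeably less wafer area than one large die with the same total logic, because a single defect scraps only a quarter as much silicon. Real cost comparisons also depend on packaging, testing and interconnect overhead.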

Also important was outsourcing advanced node fabrication (e.g. via TSMC) rather than trying (and often failing) to keep up in manufacturing in‐house on all fronts. This enabled AMD to stay more reliably on cutting‐edge process tech. (Business Insider)

Expanding into server, data center, and AI acceleration markets (EPYC, Instinct, MI300 etc.)

Recognizing that the explosive growth of cloud, data centers, HPC, and increasingly AI workloads was the next frontier, Lisa Su pushed AMD to develop a full stack of server CPUs and accelerators. Examples:

EPYC series to challenge Intel in the server market. (Business Insider)

The Instinct/MI series of AI accelerators (especially MI300 etc.) to compete in AI training/inference workloads. (TIME)

Integrated CPU/GPU/Memory designs (e.g. AI accelerators with high bandwidth memory) to make high-scale compute more efficient. (asianintelligence.ai)

Strategic acquisitions and ecosystem expansion

To round out capabilities beyond just raw CPU/GPU silicon, AMD under Su made important acquisitions:

Xilinx (2022) for FPGAs and adaptive compute. This helped with custom, flexible workloads, including AI network functions etc. (asianintelligence.ai)

Pensando: to bolster data center networking / infrastructure layers. (TIME)

ZT Systems, Silo AI etc., strengthening its ability to build end-to-end systems—not just chips. This lets AMD offer more complete solutions to hyperscalers and large AI customers. (asianintelligence.ai)

Building out the software and ecosystem side (ROCm etc.), plus partnerships & customer trust

Hardware alone isn’t enough. Lisa Su recognized the importance of developer tools, software, ecosystem lock-in, and strong relationships with cloud providers, hyperscalers, OEMs etc. Some examples:

Investing in ROCm (AMD’s open compute stack) to provide alternatives to CUDA, which is a major barrier to switching for many AI workloads. (asianintelligence.ai)

Deep partnerships with Microsoft, Amazon, Meta, Oracle etc., to secure both design wins and large-scale deployments. (asianintelligence.ai)

Emphasis on reliability, performance, efficiency—not just headline numbers, but total cost of ownership, which matters to enterprises and cloud providers. Delivering what was promised elevated AMD’s credibility. (Business Insider)

If you want, I can contrast those decisions with what Intel did (or didn’t do) that created openings, so you can see how AMD exploited Intel’s weakness. Do you want that?

Here’s a timeline (2014–2025) showing how Lisa Su’s key strategic moves at AMD systematically repositioned the company from near-bankruptcy to becoming Intel’s equal in CPUs and Nvidia’s only real rival in AI compute.

🕰️ AMD Under Lisa Su: Timeline of Strategic Transformation (2014–2025)

2014 – Taking the Helm

Event: Lisa Su becomes AMD CEO (October 2014).

Context: AMD is unprofitable, losing CPU market share to Intel and GPU share to Nvidia.

Strategic Shift: Refocus on engineering excellence and high-performance computing (HPC) instead of chasing low-end consumer markets.

2015–2016 – The Zen Gamble

Event: Major investment in Zen architecture development.

Moves:

Su diverts R&D budget to a clean-slate CPU core design.

Halts weak product launches to rebuild credibility.

Impact: Set the stage for Ryzen and EPYC, which would later dethrone Intel’s dominance.

2017 – Ryzen and EPYC Launch

Event: Launch of Ryzen (consumer) and EPYC (server) processors based on Zen.

Impact:

AMD returns to high-performance desktop computing.

EPYC begins penetrating data centers, a space Intel dominated for a decade.

Rival Comparison: Intel struggles with 10nm delays; Nvidia expands AI GPU dominance (Volta).

2018 – Chiplet Breakthrough

Event: AMD unveils chiplet-based architecture for Zen 2 and beyond.

Strategy: Modular dies + TSMC manufacturing for better yields and cost.

Impact:

AMD breaks free from monolithic design constraints.

Intel still stuck on aging 14nm process.

2019 – Datacenter Expansion & HPC Wins

Event: Launch of EPYC Rome (7nm) and growing cloud adoption.

Impact: Amazon AWS, Google Cloud, and Microsoft Azure begin offering AMD-powered servers.

Rival Comparison: Nvidia dominates AI training, but AMD begins competing in HPC compute (Cray supercomputers).

2020 – AI & HPC Integration

Event: Launch of Instinct MI100 GPU accelerator.

Impact: AMD enters AI and HPC markets in earnest.

Strategy Shift: Focus on heterogeneous compute (CPU + GPU synergy).

Context: Intel still delayed on Xeon; Nvidia releases Ampere GPUs.

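2021 – Xilinx Acquisition in Progress

Event: AMD's $35 billion all-stock acquisition of Xilinx, announced in October 2020, moves through shareholder and regulatory approvals (closed in February 2022).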

Strategic Significance:

Adds FPGAs and adaptive compute to AMD’s portfolio.

Expands into 5G, automotive, and edge AI.

Contrast: Intel’s AI startups (Nervana, Habana) underperform; Nvidia fails to acquire ARM.

2022 – Adaptive and Data-Centric AMD

Events:

Xilinx merger completed.

Pensando acquisition for networking acceleration.

Impact: AMD now covers CPUs, GPUs, FPGAs, DPUs — a full compute stack.

Rival Comparison: Intel reeling from process issues; Nvidia still dominant in AI but increasingly costly.

2023 – MI300 Superchip & ROCm Momentum

Event: AMD unveils the MI300 series: the MI300A, a data-center APU combining CPU + GPU + HBM in one package, and the GPU-only MI300X accelerator.

Software Push: ROCm 5.x matures; gains traction with PyTorch and OpenAI ecosystems.

Adoption: Microsoft, Meta, and Oracle announce plans to deploy MI300 for AI training.

Significance: AMD becomes the first true alternative to Nvidia in high-end AI compute.

2024 – Breaking the CUDA Wall

Event: ROCm integration into major frameworks (PyTorch, Triton, TensorFlow).

Impact: Developers can port CUDA models to AMD GPUs with minimal code change.

Strategic Result: Hyperscalers begin dual-sourcing AI hardware — Nvidia + AMD.

Intel Comparison: Xeon and Gaudi lag in adoption and performance.
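The "minimal code change" point in the 2024 entry rests largely on how PyTorch's ROCm builds are packaged: they expose AMD GPUs through the same `torch.cuda` interface and the same `"cuda"` device string used for Nvidia hardware. The sketch below illustrates that idea. The helper names `describe_backend` and `pick_device_string` are ours, invented for illustration, and the code falls back gracefully when PyTorch or a GPU is absent. It says nothing about performance or about custom CUDA kernels, which generally still need porting through HIP.

```python
def describe_backend() -> str:
    """Report which backend, if any, PyTorch would use in this environment."""
    try:
        import torch  # optional dependency; absence is handled below
    except ImportError:
        return "pytorch-not-installed"
    if not torch.cuda.is_available():
        return "cpu"
    # ROCm builds set torch.version.hip to a version string;
    # CUDA builds leave it as None.
    return "rocm" if getattr(torch.version, "hip", None) else "cuda"


def pick_device_string(backend: str) -> str:
    """Map a backend label to the device string model code would request.

    The same "cuda" string addresses Nvidia GPUs on CUDA builds and
    AMD GPUs on ROCm builds, so typical model code needs no edits.
    """
    return "cuda" if backend in ("cuda", "rocm") else "cpu"


backend = describe_backend()
print(f"backend: {backend}, device string: {pick_device_string(backend)}")
```

On a machine with a ROCm build of PyTorch, a script that already calls `model.to("cuda")` would be expected to run on the AMD GPU unchanged, which is the kind of portability the timeline entry describes.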

2025 – AI Rivalry Era

Events:

AMD MI350 series and Zen 5 CPUs roll out on advanced TSMC 3nm process.

ROCm 6.0 achieves near-parity with CUDA performance on major AI workloads.

Microsoft and Meta scale LLM training clusters on AMD hardware.

Outcome: AMD cements position as Intel’s successor in compute and Nvidia’s main AI rival.

Intel: Still recovering manufacturing credibility.

Nvidia: Dominant but facing real competition for the first time in 15 years.

Here’s a side-by-side analysis showing how Lisa Su’s five pivotal AMD strategies aligned with (and capitalized on) Intel’s missteps. This helps explain how AMD overtook Intel in CPUs and positioned itself as Nvidia’s most credible AI rival.

🧠 1. Zen Architecture vs. Intel’s Stalled Innovation

AMD (Lisa Su’s move):

Killed off the underperforming “Bulldozer” line and started fresh with Zen, a ground-up CPU design focused on IPC (instructions per cycle), scalability, and efficiency.

Prioritized performance per watt and server-grade scalability — critical for cloud and AI workloads.

Was willing to delay launches until performance leadership was credible.

Intel’s misstep:

Spent much of the 2010s iterating minor tweaks on its “Skylake” core design, effectively stagnating.

Failed to move past 14nm process technology for nearly six years, causing performance and efficiency plateaus.

Grew complacent under dominance — prioritizing margin protection over architectural innovation.

Result:

By 2020, AMD’s Zen 3 CPUs outperformed Intel’s top chips in both price/performance and energy efficiency, winning major server design wins at Amazon, Microsoft, and Google.

🧩 2. Chiplet Architecture vs. Intel’s Monolithic Design

AMD (Su’s innovation):

Adopted modular “chiplet” architecture using smaller, cheaper dies interconnected with high-speed fabric.

Used TSMC for leading-edge manufacturing instead of investing in AMD’s own fabs.

Gained cost advantages, better yields, and scalability to mix CPU, GPU, and I/O dies.

Intel’s misstep:

Stuck with large monolithic dies, harder and costlier to produce as transistor counts soared.

Its in-house fabrication delays (10nm and 7nm) crippled both performance and capacity.

Only recently (post-2022) began pivoting to chiplet-like designs (Meteor Lake, Arrow Lake), years behind AMD.

Result:

AMD could iterate faster, scale server CPUs (EPYC) to unprecedented core counts, and offer better value per watt — a killer feature for data centers.

☁️ 3. Data Center & AI Focus vs. Intel’s PC Dependence

AMD (Su’s move):

Reoriented AMD from consumer CPUs/GPUs toward enterprise, cloud, and AI markets.

Invested heavily in EPYC server chips and Instinct AI accelerators (MI250, MI300X).

Positioned AMD as a one-stop supplier for data center compute: CPU + GPU + APU (combined accelerator).

Intel’s misstep:

Remained overly dependent on PC CPU revenue, missing the shift to cloud and AI.

Its discrete GPU effort (Arc, Ponte Vecchio) arrived late and underperformed.

Intel’s Xeon server dominance eroded as EPYC delivered double-digit performance and efficiency gains.

Result:

AMD’s data-center revenue share rose from under 1% in 2017 to over 30% by 2024, while Intel’s eroded sharply.

🔗 4. Strategic Acquisitions vs. Intel’s Overreach and Execution Failures

AMD (Su’s move):

Acquired Xilinx (2022) for FPGA and adaptive compute tech, giving it flexible AI and networking capabilities.

Bought Pensando (2022) for data center networking and distributed compute.

Integrated these assets smoothly — maintaining focus and cultural alignment.

Intel’s misstep:

Spent billions on acquisitions (e.g., Mobileye, Habana, Nervana) but failed to integrate them effectively.

Killed multiple in-house AI chip efforts (e.g., Nervana) after short runs.

Suffered from fragmented internal R&D and high turnover in leadership.

Result:

AMD built a cohesive, future-ready compute portfolio, while Intel’s acquisitions mostly diluted focus.

🧰 5. Open Ecosystem & Partnerships vs. Intel’s Closed Culture

AMD (Su’s move):

Invested in ROCm (open-source CUDA alternative) and open AI frameworks.

Partnered deeply with hyperscalers (Microsoft, Meta, Amazon, Oracle) for large-scale AI systems.

Promoted a transparent, cooperative image — reversing AMD’s old “scrappy underdog” isolation.

Intel’s misstep:

Clung to a proprietary software ecosystem (oneAPI adoption lagged).

Lost developer mindshare and customer trust amid repeated delays and missed targets.

Partners like AWS, Google, and Microsoft diversified away from Intel as AMD delivered on promises.

Result:

AMD gained credibility and loyalty among top cloud and AI players — something Intel once monopolized.

🔺 How Lisa Su Positioned AMD Between Intel and Nvidia

🧠 1. Zen & Compute Leadership

AMD’s Strategy

Lisa Su rebuilt AMD’s core CPU architecture from the ground up (Zen) to deliver best-in-class compute per watt — the foundation of both cloud and AI workloads.

Intel’s Weakness

Stuck on aging designs and manufacturing nodes.

Xeon CPUs became inefficient for AI preprocessing and inference.

Nvidia’s Edge — and AMD’s Counter

Nvidia dominates AI acceleration, not general compute.

AMD’s EPYC CPUs now power AI clusters alongside GPUs (including Nvidia’s), establishing AMD as an indispensable half of modern AI servers.

The MI300A APU fuses Zen 4 CPU cores + CDNA3 GPU cores in one package — blurring CPU/GPU boundaries that Nvidia cannot match yet.

→ Strategic result: AMD moved from being “the cheaper alternative to Intel” to being the hybrid compute platform vendor in AI data centers.

🧩 2. Chiplet Architecture & Advanced Packaging

AMD’s Strategy

AMD’s modular “chiplet” design, combined with its Infinity Fabric interconnect and advanced 3D packaging (such as 3D V-Cache), cut costs and boosted flexibility.

Intel’s Weakness

Long reliance on monolithic dies and slow pivot to disaggregated packaging.

Ongoing struggles ramping advanced nodes in-house.

Nvidia’s Edge — and AMD’s Counter

Nvidia still uses large monolithic GPU dies (e.g., Hopper).

AMD’s chiplet design scales compute and memory more efficiently, enabling higher yield and faster product refreshes.

This underpins AMD’s MI300X, the first chiplet-based AI superchip with massive unified HBM3 memory — directly challenging Nvidia’s H100/H200 on performance per watt.

→ Strategic result: AMD leverages TSMC and modularity to match Nvidia’s power at lower cost and higher manufacturing resilience.

☁️ 3. Data Center & AI Market Expansion

AMD’s Strategy

Shifted the company’s center of gravity from PCs to the data center: EPYC CPUs, Instinct GPUs, adaptive SoCs, and full AI platforms.

Intel’s Weakness

Overdependence on PC CPUs.

Late and weak discrete GPU entries.

Lagging performance and efficiency in AI inference workloads.

Nvidia’s Edge — and AMD’s Counter

Nvidia owns the AI training market (~80% share).

AMD isn’t just chasing — it’s positioning as the alternative supplier for hyperscalers demanding diversity and lower costs.

The MI300 series is already adopted by Microsoft Azure and Meta for training large language models.

→ Strategic result: AMD turned from a chip vendor into an AI infrastructure partner, directly encroaching on Nvidia’s hyperscaler relationships.

🔗 4. Acquisitions & Adaptive Compute

AMD’s Strategy

Lisa Su’s targeted M&A — not scattershot expansion — built complementary capabilities:

Xilinx (2022): Adaptive compute and FPGAs for AI edge & inference.

Pensando (2022): Networking and distributed data-plane acceleration.

Intel’s Weakness

Poor integration of AI acquisitions (Habana, Nervana).

Fragmented strategy between client, data, and foundry segments.

Nvidia’s Edge — and AMD’s Counter

Nvidia has CUDA and a unified software stack, but less flexibility for custom silicon or adaptive logic.

AMD now offers adaptive + fixed + general-purpose compute — spanning CPUs, GPUs, FPGAs, and DPUs.

→ Strategic result: AMD positioned as the customizable compute supplier for cloud and enterprise AI — an angle Nvidia lacks.

🧰 5. Open Software & Ecosystem Partnerships

AMD’s Strategy

Built ROCm, an open-source alternative to Nvidia’s CUDA.

Formed alliances with Microsoft, Meta, Oracle, and OpenAI for AI model optimization and training on AMD hardware.

Promoted an open-ecosystem message to attract developers wary of CUDA lock-in.

Intel’s Weakness

oneAPI lacks traction; ecosystem fragmented.

Nvidia’s Edge — and AMD’s Counter

CUDA is deeply entrenched — Nvidia’s strongest moat.

But ROCm + PyTorch + Triton integration + open standards (like MLIR, HIP) are chipping away at CUDA’s exclusivity.

Cloud providers increasingly pre-install ROCm on AI VMs, giving AMD software parity and long-term credibility.

→ Strategic result: AMD is slowly breaking Nvidia’s software monopoly and positioning itself as the open, scalable alternative.

⚖️ Overall Positioning Summary

| Strategic Axis | AMD (Lisa Su) | Intel | Nvidia | AMD’s Net Advantage |
| --- | --- | --- | --- | --- |
| Compute Architecture | Zen CPUs & CDNA GPUs | Aging cores | GPU-only | Hybrid compute leadership |
| Manufacturing & Design | Chiplet + TSMC | In-house delays | Monolithic | Fast, flexible iteration |
| Market Focus | Data center & AI | PC-centric | AI-centric | Diversified & fast-growing |
| M&A & Capabilities | Xilinx, Pensando integrated | Scattered | Internal dev only | Broader compute toolkit |
| Software & Ecosystem | Open ROCm, partnerships | Fragmented | Proprietary CUDA | Openness + hyperscaler backing |

🚀 The Strategic Outcome

Under Lisa Su, AMD has:

Out-innovated Intel on architecture and efficiency.

Matched Nvidia on compute performance and begun undermining its software lock-in.

Captured mindshare as the credible No. 2 in AI silicon — not a challenger, but a partner shaping the post-Nvidia era of AI compute.


(Image by ChatGPT)
